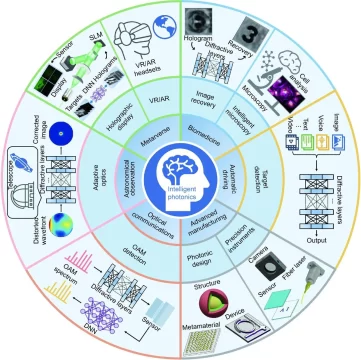

Photonics spans a broad range of technologies, including display and imaging photonics, sensing photonics, and silicon photonics. This article focuses on the first two categories: the use of photonics in microdisplays, XR projection systems, cameras, LiDAR, depth sensing, and thermal imaging. For years, photonics occupied a supporting role in technology roadmaps – important for displays, sensing, and imaging, but viewed primarily as a component layer rather than strategic infrastructure. That positioning has fundamentally changed. Photonics for perception and human interfaces has crossed a strategic threshold and is rapidly becoming foundational infrastructure for:

- AI-driven systems

- Autonomous platforms

- Spatial computing

- Defense electronics

- Advanced semiconductor manufacturing

The reason is simple but profound: modern systems no longer compete on compute alone. They compete on perception. And perception is created, constrained, and ultimately limited by optical data.

The quality, fidelity, and reliability of optical inputs increasingly determine what AI can see, understand, and decide. As a result, photonics is moving from an enabling technology to a differentiating one. This shift is reshaping product architecture, influencing system-level design decisions, and driving renewed investment and consolidation across the photonics ecosystem. It is contributing to one of the most active periods of M&A and vertical integration the photonics industry has experienced in over a decade.

From Component to Control Point

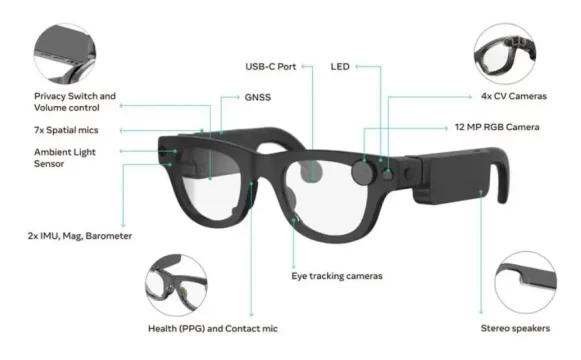

You can see this clearly in the strategies of companies like Apple. Vision Pro isn’t defined by a single sensor, but by a tightly integrated optical stack that includes multiple cameras, depth sensing, eye tracking, and spatial awareness working together. Spatial computing only works when optical data is dense, synchronized, and trustworthy, and when display systems can render content seamlessly into the user’s field of view. Software alone cannot compensate for weak optical inputs or poor display integration.

The same logic applies in autonomy. Waymo has repeatedly emphasized that safe autonomy depends on rich, redundant sensing. Cameras, LiDAR, depth sensors, and perception-grade imaging form the backbone of real-world decision-making. AI models trained on poor or inconsistent optical data simply cannot generalize safely at scale.

This pattern repeats across sectors. Photonics is no longer enabling innovation at the margins. It is shaping what systems can realistically do.

Why Urgency is Accelerating Across the Ecosystem

What makes the current moment different from previous photonics hype cycles is that pilots are now converting into sustained, large-scale deployments. XR devices are shipping in the millions. Autonomous fleets are operating commercially. Industrial vision systems are being deployed across global factories. Semiconductor manufacturing is pushing deeper into regimes where optical precision determines feasibility.

Once platforms cross this threshold, priorities shift dramatically. Performance alone is no longer enough. Buyers begin to care deeply about yield stability, manufacturability, long-term reliability, and cost-down trajectories. This is where many photonics companies either mature into strategic assets or get left behind.

As a result, strategic buyers are moving earlier and more aggressively to secure optical IP, sensor subsystems, and manufacturing capability. This explains the surge in photonics M&A activity across AI optics, EO/IR sensing, LiDAR, metrology, lasers, and silicon photonics.

The New Buyer Landscape Reshaping Photonics

Big technology platforms have become some of the most aggressive buyers in photonics, particularly in XR and spatial computing. Companies such as Meta, Google, and Snap increasingly recognize that controlling the photonics layer, both for sensing and display, is essential to controlling user experiences.

Waveguides, laser beam scanning engines, microdisplays, compact projection systems, eye-tracking optics, and depth sensors are not just supply chain items; they determine form factor, brightness, power consumption, field of view, and comfort. Owning or tightly controlling these technologies reduces dependency and increases differentiation as devices move toward mass-market scale.

Aerospace and defense buyers approach photonics through a different but equally strategic lens. Companies such as Teledyne and Leonardo DRS have steadily expanded their optical portfolios to support EO/IR sensing, thermal imaging, SWIR, and laser-based systems. These buyers value manufacturability, qualification, and supply chain security as much as raw innovation.

In semiconductor equipment and industrial technology, photonics is inseparable from progress. Advanced lithography, inspection, and metrology are fundamentally optical problems. Companies such as ASML and Zeiss demonstrate how deeply optics has become integrated into manufacturing platforms. As tolerances tighten and nodes advance, optical systems determine yield behavior, throughput, and economic viability. This makes photonics one of the most defensible and strategic technology layers in the entire semiconductor value chain.

Automotive, robotics, and mobility platforms represent another rapidly expanding buyer group. Tier-1 suppliers and OEM ecosystems increasingly rely on optical perception to support advanced driver assistance, partial autonomy, and robotic navigation. Depth cameras, LiDAR sensors, and advanced imaging are no longer experimental. They are being designed into production systems with automotive-grade reliability requirements. This transition raises the bar for optical data consistency, calibration stability, and high-volume test readiness.

Competing Photonics Technologies Defining the Next Decade

Competition is intensifying across several key technology fronts. In XR and near-eye displays, waveguide architectures and laser-based projection systems are racing to balance brightness, efficiency, and manufacturability. MicroLED-based display engines promise dramatic gains in luminance and power efficiency but introduce new yield and test challenges at scale.

In AI sensing, camera systems are evolving beyond resolution into dynamic range, spectral sensitivity, synchronization, and depth accuracy. LiDAR technologies continue to diversify, with different architectures trading off range, resolution, cost, and robustness. Thermal and SWIR imaging are gaining importance for perception systems that must operate reliably in low-light, obscured, or high-contrast environments.

Across all of these technologies, one theme dominates:

Performance gains are meaningless if photonic components cannot be produced consistently, optical data cannot be measured accurately, and results cannot be correlated across manufacturing and lifecycle stages.

Photonic Data Quality is Now the Bottleneck

The industry is confronting an uncomfortable truth.

AI accuracy, reliability, and safety are no longer constrained primarily by model architecture or compute power. They are constrained by input data quality. Likewise, XR experiences are no longer limited by rendering capabilities alone; they are constrained by display brightness, field of view, and optical integration quality.

In photonics-heavy systems, this means that optical sensor data quality propagates upward into AI models, just as display performance propagates into the user experience. No amount of downstream processing can fully compensate for degraded optical inputs or poor display integration.

This is why photonics companies increasingly face challenges that look less like hardware problems and more like data problems.

How do you correlate sub-device optical behavior to die-level results?

How do you manage retests, wavelength sweeps, and multi-port measurements?

How do you detect subtle shifts before they become yield loss or field failures?
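
As one illustration of the last question, a common statistical process control approach is an exponentially weighted moving average (EWMA) chart, which accumulates small deviations that a single-point limit check would miss. The sketch below is a minimal example under stated assumptions, not a description of any particular vendor's method: the function name `first_ewma_alarm`, the smoothing weight `lam`, the limit multiplier `k`, and the insertion-loss readings are all hypothetical.

```python
from statistics import mean, stdev


def first_ewma_alarm(values, baseline_count=20, lam=0.2, k=3.0):
    """Return the index of the first reading whose EWMA leaves the control
    limits, or None if the series stays in control.

    The first `baseline_count` readings are assumed to be in control and are
    used to estimate the process mean and standard deviation.
    """
    if baseline_count < 2 or len(values) < baseline_count:
        raise ValueError("need at least two baseline readings")

    baseline = values[:baseline_count]
    mu = mean(baseline)
    sigma = stdev(baseline)

    ewma = mu  # start the moving average at the baseline mean
    for step, x in enumerate(values[baseline_count:], start=1):
        ewma = lam * x + (1.0 - lam) * ewma
        # Time-varying EWMA variance factor; it widens toward lam / (2 - lam).
        variance_factor = (lam / (2.0 - lam)) * (1.0 - (1.0 - lam) ** (2 * step))
        limit = k * sigma * variance_factor ** 0.5
        if abs(ewma - mu) > limit:
            return baseline_count + step - 1
    return None


# Hypothetical insertion-loss readings in dB, one per tested device.
stable = [1.0, 1.2] * 10            # in-control baseline
shifted = stable + [1.3, 1.5] * 5   # same spread, mean moved upward

print(first_ewma_alarm(shifted))                   # index of the first alarm
print(first_ewma_alarm(stable + [1.0, 1.2] * 5))   # None: no shift present
```

A smaller `lam` makes the chart more sensitive to slow drifts at the cost of a slower reaction to abrupt jumps, so the value is usually tuned per parameter.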

The companies that solve these problems will not just build better optics. They will enable better AI and more compelling XR experiences.

The consolidation wave sweeping photonics is not driven by hype. It is driven by a recognition that optical systems now sit directly in the AI value chain. Better sensing optics mean cleaner data. Cleaner data means more accurate AI. More accurate AI means safer autonomy, more compelling XR, higher-yield manufacturing, and more reliable infrastructure.

Scaling photonics requires more than innovation. It requires yield intelligence.

yieldWerx helps teams transform complex optical test data into actionable insight across the entire manufacturing lifecycle. Contact us today to learn more.

Written by M. Rameez Arif, Content & Communication Specialist at yieldWerx. Edited by Tina Shimizu, Content Strategist at yieldWerx.

FAQs

What is Optical Data?

Optical data is information captured through light-based systems, including images, depth measurements, spectral data, and time-of-flight signals. This data forms the raw input for AI models in applications such as autonomy, XR, robotics, industrial inspection, and defense.

What is AI Sensing?

AI sensing refers to sensor systems designed specifically to support AI workloads. These systems emphasize data fidelity, synchronization, calibration stability, and repeatability so that AI models can make accurate, real-time decisions based on physical-world inputs.

What is Depth Sensing?

Depth sensing measures the distance between a sensor and objects in the environment. It enables 3D perception, spatial mapping, and object localization. Depth data is essential for XR, robotics, and autonomy, where understanding spatial relationships is critical.

What is LiDAR?

LiDAR (Light Detection and Ranging) is a sensing technology that emits laser pulses and measures their return time to calculate distance. It produces precise 3D maps of the environment and is widely used in autonomous vehicles, robotics, and industrial mapping.
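
The pulse-timing principle described above reduces to a one-line calculation: the pulse travels to the target and back, so the distance is half the round-trip time multiplied by the speed of light. The following is a minimal sketch of that arithmetic only; the function name `tof_distance_m` is an assumption for illustration, and real sensors add corrections (timing jitter, atmospheric effects, detector latency) that are not modeled here.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a target given the round-trip time of a laser pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # Divide by two because the pulse covers the distance twice.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A return detected 1 microsecond after emission.
print(f"{tof_distance_m(1e-6):.1f} m")  # prints "149.9 m"
```

The same relationship shows why timing precision matters: a 1 ns timing error corresponds to roughly 15 cm of range error.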

What is EO/IR Sensing?

EO/IR (Electro-Optical / Infrared) sensing combines visible-light imaging with infrared detection. It is used in defense, aerospace, industrial inspection, and autonomy to detect heat signatures, operate in darkness, and see through obscurants like smoke or fog.

What is SWIR Imaging?

SWIR (Short-Wave Infrared) imaging operates at wavelengths beyond visible and near-infrared light, roughly 1 to 2.5 µm, though band definitions vary. It enables material discrimination, moisture detection, and improved contrast in challenging environments. SWIR is increasingly important for AI-driven inspection and defense applications.

What is XR (AR/VR/MR)?

XR (Extended Reality) encompasses Augmented Reality, Virtual Reality, and Mixed Reality. XR systems rely heavily on optics, sensors, and depth perception to merge digital content with the physical world or create immersive virtual environments.

What are Microdisplays?

Microdisplays are very small, high-resolution displays used in XR devices. Technologies include OLED, LCOS, and MicroLED. MicroLED microdisplays promise high brightness and efficiency but present significant manufacturing and yield challenges.

What is Spatial Computing?

Spatial computing refers to systems that understand and interact with the physical world in three dimensions. It combines optical sensing, depth perception, and AI to enable applications like immersive AR, object interaction, and real-world mapping, as seen in platforms like Apple Vision Pro.

What is Silicon Photonics?

Silicon photonics integrates optical components, such as waveguides, modulators, and detectors, onto silicon chips. It enables high-speed, low-power optical communication using semiconductor manufacturing processes.